Addressing the darkness under the lighthouse

(灯台下暗し)

DENNOU AI

We chose "Dennou" (電脳) because it means "cyber brain" in Japanese. It reflects what we're after: tools that don't just work alongside people, but elevate them. Ada Lovelace saw computers as partners that could help us think and create in new ways, not just as fancy calculators. Our streamlined approach addresses 灯台下暗し ("darkness under the lighthouse"), shining light on the overlooked potential right beneath us. That's exactly what we're building.

REDEFINING BUSINESS AI

AI Without the Empty Promises

We've seen too many businesses burned by over-hyped AI solutions that cost a fortune and deliver very little.

Cutting Through the Hype

We don't do AI for AI's sake. We solve actual problems. Most vendors start with technology and try to find a use for it.

We do the opposite: start with your problem, then figure out whether AI can help.

Built on Real Experience

Our team has worked with the Gates Foundation, the National Physical Laboratory, the European Space Agency, and major universities. We've earned our stripes building AI that works in the real world.

Made for Businesses Like Yours

Most AI solutions are built for tech giants with endless resources.

We build for businesses that need realistic results with enterprise-level reliability, whilst ensuring our solutions deliver on security and privacy.

PRACTICAL AI SOLUTIONS FOR REAL PROBLEMS

Solutions That Work Monday Morning

No more AI projects that look impressive in demos but fall apart in the real world.

AI That Actually Fits Your Business

- Turn manuals into knowledge that actually helps.

- Set up smart agents that handle the simple stuff.

- Use image recognition to diagnose problems customers show you.

- Make sure complex cases reach the right human, triaged and fast.

- Cut resolution time by up to 60%.

- Streamline workflows you already have.

- Focus on measurable improvements, not buzzwords.

Consulting That Makes Sense

- Get straight talk about what AI can (and can't) do for you.

- Develop implementation plans that work with your actual resources.

- Find patterns in your data that point to real opportunities.

- Build tools that solve specific headaches.

- Focus on tangible outcomes like cost reduction.

- Build a roadmap that doesn't require an army of specialists.

THE DENNOU AI DIFFERENCE

Let's talk about cutting costs while making your customers happier. Schedule your free consultation. Let's figure out what practical AI can do for your business: no hype, no jargon, just honest expertise.

© Dennou AI. All rights reserved.

Casino Royale: The House Always Wins

In the glittering new casino of AI, the house always wins. Are you sure you're not the one paying for the table?

Businesses are rushing to place their bets on third-party AI platforms, chasing quick jackpots in productivity and efficiency. But very few are asking what happens "behind the curtain":

- Who truly owns the intelligence created from your data?

- What happens when the house learns your winning strategy?

- Is your "promise" of data privacy just good marketing?

Before you push more of your chips onto the table, you need to understand the rules of the game. I’ve detailed my thoughts below.

Your Most Valuable Player is a liability. It's time to use them to help build better armor.


Your Most Valuable Player is Your Biggest Liability. AI Can Fix That.

In engineering, we have a term for this: the "bus factor." It measures how many key people would need to be hit by a bus to derail a project. When that number is one, you don’t have an MVP; you have a single point of failure.

We experienced this firsthand when we spent six months trying to reverse-engineer a critical process after an expert left. An AI we built did it in seconds. The lesson was clear: the solution to human knowledge gaps had to be technological.

But which technology? The traditional business response is to throw people and money at the problem by hiring consultants. This is a temporary fix at best: a costly exercise that produces manual reports, leaving you to make decisions with stale data.

The modern tech response is equally flawed: "just throw it at a big LLM." This too is a band-aid, and it fails for three critical reasons:

It Doesn't Scale

An LLM might handle one 500-page document. But what about 200 of them? The context windows break, and the API costs become astronomical. It’s simply not built for enterprise-scale knowledge.

Your IP is at Risk

Are you comfortable uploading your core intellectual property, your "secret sauce", and handing it freely to a third-party AI provider? For most businesses, the answer is a hard no.

It's a Black Box

Off-the-shelf AI solutions give you an answer, but no reasoning. There’s little-to-no source tracing. When it fails, you have no idea why, and you have almost zero options for customization. It either works or it doesn't, and that’s not good enough.

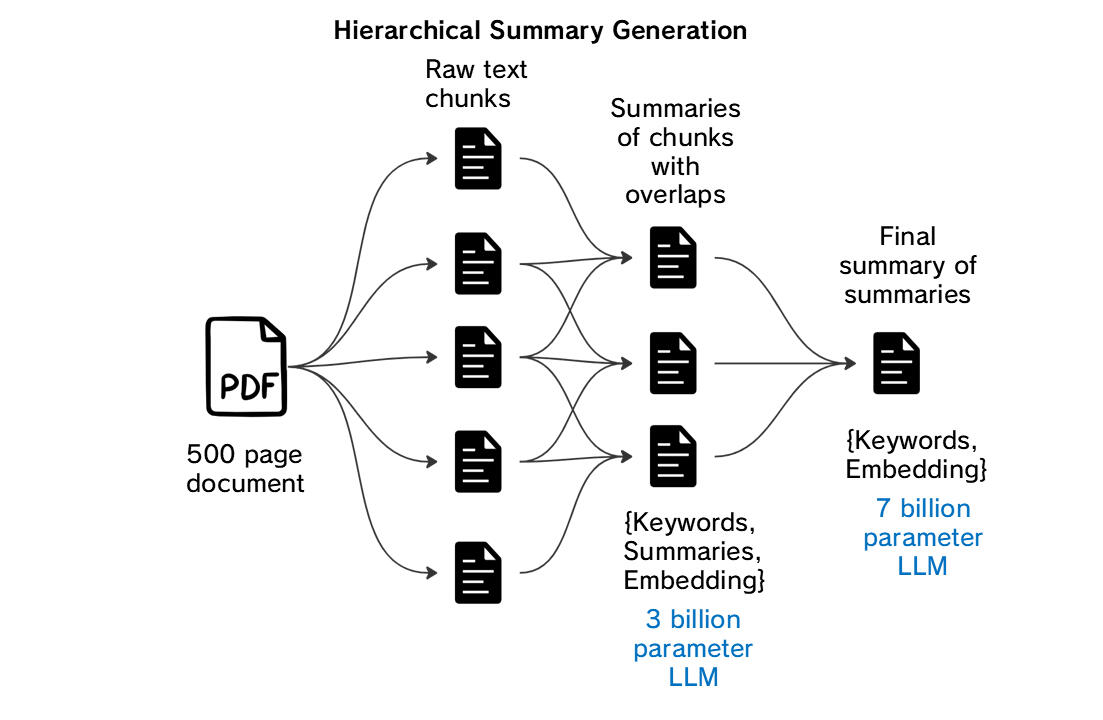

The Solution: Hierarchical Summary Generation

Let's step through the core architecture.

Systematic information distillation that preserves accuracy while enabling fast retrieval.

Layer 1: Chunk Processing

- Split documents into overlapping segments (each chunk overlaps its neighbours by about 25%, so text at the boundaries appears in more than one summary)

- Individual LLM processing per chunk

- Extract keywords and entities from each
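The chunking step can be sketched in a few lines. This is a minimal sketch that assumes word-based chunks with the 25% overlap described above. `summarise_chunk` is a hypothetical placeholder for the per-chunk LLM call. The frequency-based `extract_keywords` is a simple stand-in for LLM keyword and entity extraction, not our production method.

```python
import re
from collections import Counter
from typing import Dict, List

# Hypothetical stopword list for the frequency-based keyword stand-in.
STOPWORDS = {"the", "and", "for", "with", "that", "this", "from", "are", "was"}


def chunk_words(words: List[str], chunk_size: int = 400,
                overlap: float = 0.25) -> List[List[str]]:
    """Split a word list into chunks whose tails overlap the next chunk.

    With overlap=0.25, each chunk starts 75% of a chunk after the previous
    one, so the last quarter of every chunk reappears in the next chunk.
    """
    if chunk_size < 1 or not 0 <= overlap < 1:
        raise ValueError("chunk_size must be >= 1 and overlap in [0, 1)")
    step = max(1, int(chunk_size * (1 - overlap)))
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(words[start:start + chunk_size])
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the document
    return chunks


def extract_keywords(text: str, top_k: int = 5) -> List[str]:
    """Stand-in for LLM keyword/entity extraction: most frequent terms."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    counts = Counter(t for t in tokens if len(t) > 2 and t not in STOPWORDS)
    return [term for term, _ in counts.most_common(top_k)]


def summarise_chunk(chunk: List[str]) -> Dict[str, object]:
    """Hypothetical placeholder for the per-chunk LLM call."""
    text = " ".join(chunk)
    return {"summary": text[:200], "keywords": extract_keywords(text)}


words = [f"w{i}" for i in range(10)]
for chunk in chunk_words(words, chunk_size=4):
    print(chunk)
```

With `chunk_size=4`, the ten words produce three chunks. Each chunk shares one word (25%) with its neighbour, so nothing at a boundary is seen only once.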

Why this matters: No detail gets lost to context window limits. Each piece gets full attention.

Layer 2: Dynamic Batch Synthesis

- Group chunks into digestible batches (~20 summaries)

- Recursive layering—batch summaries get re-batched and re-summarized

- Vector embeddings generated for the full population of summaries

- Continuous keyword aggregation across layers
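The recursive batching can be sketched as below. This is a minimal sketch that assumes batches of about 20 summaries, as described above. `Node`, `build_layers`, and `fake_summarise` are hypothetical names, the summarisation call is stubbed out, and the embedding step is omitted. Keywords are merged by set union as a simple stand-in for keyword aggregation across layers.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Node:
    text: str              # a chunk summary or a batch summary
    keywords: frozenset    # keywords aggregated from everything beneath it


def build_layers(nodes: List[Node],
                 summarise: Callable[[List[str]], str],
                 batch_size: int = 20) -> List[List[Node]]:
    """Re-batch and re-summarise until one node remains.

    Returns every layer bottom-up (layer 0 = chunk summaries) so that
    intermediate summaries can be stored and embedded as well.
    """
    if not nodes:
        raise ValueError("need at least one chunk summary")
    if batch_size < 2:
        raise ValueError("batch_size must be >= 2 so each layer shrinks")
    layers = [list(nodes)]
    while len(layers[-1]) > 1:
        current = layers[-1]
        next_layer = []
        for i in range(0, len(current), batch_size):
            batch = current[i:i + batch_size]
            next_layer.append(Node(
                text=summarise([n.text for n in batch]),
                # keywords are carried upward, so none are dropped between layers
                keywords=frozenset().union(*(n.keywords for n in batch)),
            ))
        layers.append(next_layer)
    return layers


def fake_summarise(texts: List[str]) -> str:
    """Hypothetical stand-in for the batch-level LLM summarisation call."""
    return f"summary of {len(texts)} items"


chunks = [Node(f"chunk {i}", frozenset({f"kw{i % 3}"})) for i in range(45)]
layers = build_layers(chunks, fake_summarise)
print([len(layer) for layer in layers])
print(sorted(layers[-1][0].keywords))
```

With 45 chunk summaries and a batch size of 20, the layers shrink from 45 to 3 to 1. The single top node then feeds the final summary, already carrying every keyword from below.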

Why this matters: It mimics how humans process information, building meaning progressively rather than through naive concatenation.

Layer 3: Final Summary

- Single executive overview from condensed summaries

- Master keyword list from entire document

- Becomes your catalog metadata
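Assembling the final catalog record might look like the sketch below. It assumes the top layer has already produced the final summary text. It ranks the master keyword list by how many chunks mention each term. That ranking rule, the `build_catalog_entry` name, the `top_n` cut-off, and the example document are illustrative assumptions, not details of our pipeline.

```python
from collections import Counter
from typing import Dict, List


def build_catalog_entry(doc_id: str, final_summary: str,
                        chunk_keywords: List[List[str]],
                        top_n: int = 10) -> Dict[str, object]:
    """Assemble one catalog row: the executive overview plus a master keyword list.

    Keywords are ranked by how many chunks mention them (document frequency),
    so a term that recurs across the document outranks a one-off mention.
    """
    doc_freq = Counter(
        kw
        for kws in chunk_keywords
        for kw in dict.fromkeys(kws)  # de-duplicate within a chunk, keep order
    )
    master_keywords = [kw for kw, _ in doc_freq.most_common(top_n)]
    return {"doc_id": doc_id, "summary": final_summary, "keywords": master_keywords}


entry = build_catalog_entry(
    "manual-042",
    "Service manual covering rear lighting and brake circuits.",
    [["brake", "lamp"], ["brake", "wiring", "brake"], ["brake", "lamp"]],
)
print(entry["keywords"])
```

Counting each keyword once per chunk stops a single keyword-dense passage from dominating the document's catalog metadata.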

Production Implementation

Storage pattern:

- Images/assets → Cloud storage, with metadata linking each image and schematic to the text generated from it

- Chunk summaries → Document-specific BigQuery tables

- Final summaries + keywords → Central catalog table
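The storage pattern can be expressed as two row shapes. This is a sketch under stated assumptions. The field names, the `doc_` table-naming scheme, and the `gs://example-bucket` URI are hypothetical. It only builds plain records and does not call BigQuery or cloud storage.

```python
import re
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class SummaryRow:
    """One row in a document-specific summary table."""
    doc_id: str
    layer: int                      # 0 = chunk summary, 1+ = batch summaries
    position: int                   # order within the layer
    summary: str
    keywords: List[str]
    embedding: Optional[List[float]] = None
    asset_uris: List[str] = field(default_factory=list)  # linked images/schematics


@dataclass
class CatalogRow:
    """One row per document in the central catalog table."""
    doc_id: str
    summary_table: str              # where this document's summaries live
    final_summary: str
    keywords: List[str]


def summary_table_id(dataset: str, doc_id: str) -> str:
    """Derive a per-document table ID using only letters, digits and underscores."""
    safe = re.sub(r"[^A-Za-z0-9_]", "_", doc_id)
    return f"{dataset}.doc_{safe}"


table = summary_table_id("knowledge_base", "manual-042.pdf")
row = SummaryRow("manual-042.pdf", 0, 7, "Replacing the brake light bulb.",
                 ["brake", "bulb"],
                 asset_uris=["gs://example-bucket/manual-042/fig7.png"])  # hypothetical URI
catalog = CatalogRow("manual-042.pdf", table, "Service manual for rear lighting.", ["brake"])
print(table)
print(asdict(row)["asset_uris"])
```

`asdict` turns each row into a plain dict. Lists of such dicts are the shape accepted by, for example, the BigQuery Python client's `insert_rows_json`. Restricting table IDs to letters, digits and underscores keeps them inside BigQuery's naming rules.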

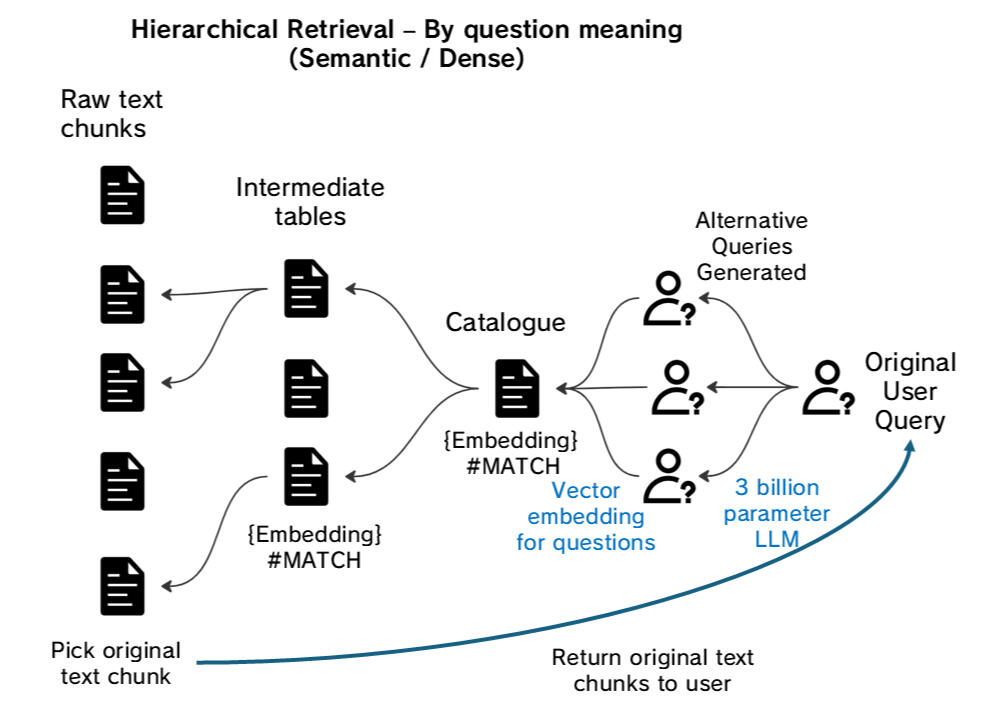

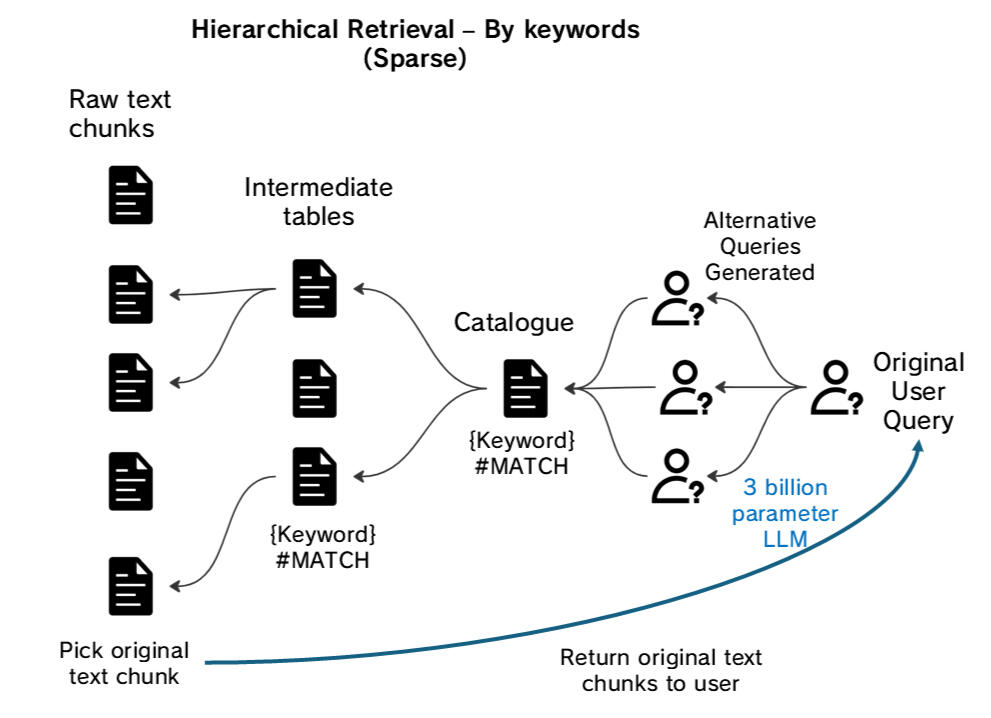

Retrieval Strategy: Smart Matching Before Heavy Lifting

First, we apply query expansion:

LLM generates alternative queries to cast a wider semantic net

(e.g., "tail lamp" → "stop light", "rear light", "brake light")

Next, we look for semantic matches: we match the meaning of the question by embedding it as a vector and comparing that embedding against the vector database. Instead of searching every document in the database, we can quickly narrow down the relevant topics.

Three-tier approach:

- Catalog matching: Expanded queries hit final summary metadata first

- Intermediate retrieval: Pull relevant batch summaries if needed

- Deep context: Access raw chunks + associated files from storage
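Query expansion and the three tiers can be sketched together as below. This is a minimal sketch with several assumptions:

- A hard-coded `EXPANSIONS` table stands in for the LLM expansion step.
- Simple term overlap stands in for embedding similarity, which keeps the example self-contained.
- The sample manual data is invented for illustration.

Each tier only searches within the documents that survived the tier above it.

```python
from typing import Dict, List

# Hypothetical stand-in for the LLM query-expansion step.
EXPANSIONS: Dict[str, List[str]] = {
    "tail lamp": ["stop light", "rear light", "brake light"],
}


def expand_query(query: str) -> List[str]:
    """Return the original query plus any alternative phrasings."""
    return [query] + EXPANSIONS.get(query.lower(), [])


def match_score(queries: List[str], text: str) -> int:
    """Toy relevance score: best term overlap between any query variant and the text."""
    terms = set(text.lower().split())
    return max(len(set(q.lower().split()) & terms) for q in queries)


def tiered_retrieve(query: str, catalog: Dict[str, str],
                    batch_summaries: Dict[str, List[str]],
                    chunks: Dict[str, List[str]], min_score: int = 1) -> Dict[str, list]:
    queries = expand_query(query)
    # Tier 1: match against final summaries in the catalog only.
    docs = [d for d, s in catalog.items() if match_score(queries, s) >= min_score]
    # Tier 2: batch summaries, but only for documents that matched tier 1.
    batches = [(d, s) for d in docs for s in batch_summaries.get(d, [])
               if match_score(queries, s) >= min_score]
    # Tier 3: raw chunks, only in documents with a matching batch summary.
    deep_docs = sorted({d for d, _ in batches})
    deep = [(d, c) for d in deep_docs for c in chunks.get(d, [])
            if match_score(queries, c) >= min_score]
    return {"documents": docs, "batch_summaries": batches, "chunks": deep}


catalog = {"m1": "Rear lighting and brake light wiring",
           "m2": "Engine oil service intervals"}
batch_summaries = {"m1": ["Brake light bulb replacement", "Indicator relay faults"],
                   "m2": ["Oil filter torque settings"]}
chunks = {"m1": ["Remove two screws to access the brake light bulb",
                 "Relay clicks fast when a bulb fails"],
          "m2": ["Tighten the oil filter by hand"]}
print(tiered_retrieve("tail lamp", catalog, batch_summaries, chunks))
```

Without the expansion step, "tail lamp" shares no terms with any of these texts, so nothing would be retrieved. With it, the search narrows to one manual, one batch summary and one raw chunk.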

We then repeat the same process using keyword matches only.

This provides another avenue for matches to happen, in the event that no close enough "meaning" match is found. The balance between dense (embedding) and sparse (keyword) retrieval has to be fine-tuned for the use case.
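One common way to balance dense and sparse retrieval is a weighted linear blend of the two scores. The sketch below assumes that approach, with these inputs:

- a hypothetical weight `alpha`;
- toy two-dimensional embeddings;
- keyword overlap as the sparse score.

It illustrates the tuning knob, not our production settings. Reciprocal rank fusion is a common alternative.

```python
import math
from typing import Dict, List, Sequence, Set, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Dense score: cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_score(query_terms: Set[str], doc_keywords: Set[str]) -> float:
    """Sparse score: fraction of query terms found in the document's keywords."""
    return len(query_terms & doc_keywords) / len(query_terms) if query_terms else 0.0


def hybrid_rank(query_vec: Sequence[float], query_terms: Set[str],
                docs: Dict[str, Tuple[List[float], Set[str]]],
                alpha: float = 0.7) -> List[str]:
    """Rank documents by alpha * dense + (1 - alpha) * sparse, best first."""
    scores = {
        doc_id: alpha * cosine(query_vec, emb)
        + (1 - alpha) * keyword_score(query_terms, kws)
        for doc_id, (emb, kws) in docs.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


docs = {
    "a": ([1.0, 0.0], {"brake"}),
    "b": ([0.0, 1.0], {"tail", "lamp"}),
}
query_vec, query_terms = [0.9, 0.1], {"tail", "lamp"}
print(hybrid_rank(query_vec, query_terms, docs, alpha=0.7))
print(hybrid_rank(query_vec, query_terms, docs, alpha=0.2))
```

With `alpha=0.7`, the dense match wins and document `a` ranks first. Dropping `alpha` to 0.2 lets the exact keyword match promote document `b`. Cosine similarity and keyword overlap sit on different scales, so scores usually need normalising before the weight is tuned.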

Production Implementation

Query flow:

- Lexical matching against aggregated keywords

- Semantic matching against summary embeddings

- Targeted deep-dive into relevant document tables only

- Schematics returned if relevant or available

Why This Approach Wins

Accuracy: Systematic processing eliminates hallucination from context overload

Scalability: Handles any document size; architecture grows with data volume

Auditability: Natural checkpoints for human review and verification

Performance: Intelligent pre-filtering reduces compute costs

Bottom Line

What started out as a quick and dirty demo turned into a fully fledged production pipeline. By systematically working through and optimizing the system design and architecture, we're now demoing a scaled-down version of a production-ready pipeline to clients.

The feedback for all this extra work? Transparency, trust, and attention to detail have been the consistent themes in client responses. Clients seeing that we're as passionate about their data as they are has been simply infectious.

We've taken the classic philosophy of samurai armor and re-engineered it for the 21st century. Instead of layering heavy steel, we've used enterprise-grade data engineering to build a flexible chassis, then interlaced it with the lightest, most advanced AI. The result isn't just armor; it's a bespoke tactical suit optimized for the speed and precision of John Wick. It's brutally effective, yet because it's 80% data pipeline, it's also modular and adaptable to your changing business needs.


Casino Royale: The House Always Wins

The current AI landscape can be understood as a grand, glittering new casino. The "house" consists of the large LLM platform providers like Google and Microsoft/OpenAI, along with the major applications built on top of them.

The "game" they offer is intoxicating: unparalleled productivity, instant analysis, and access to superhuman capabilities. The "chips" that businesses must bring to the table are their most valuable assets: their proprietary data, their confidential client information, their unique business processes, and the hard-won expertise of their people.

The Allure of the Casino Floor: The Short-Term Wins

When a business such as a law firm shares its data with an AI provider, it figuratively steps onto the casino floor, and the initial experience is fantastic. The "house" makes it easy to play. The user interface is sleek, the results are immediate, and the small jackpots are frequent.

A junior associate drafts a complex contract in 30 minutes instead of five hours. That's a jackpot.

A team instantly summarises 500 pages of due diligence documents. That's a jackpot.

The business feels smart, modern, and efficient. They are winning hands, and the cost seems low... a simple subscription fee, like buying chips at the cage. This is the pragmatic, short-term gain that my inner "critic" correctly identified as compelling.

How The House Guarantees Its Win: The Long-Term Loss

But like any casino, this one is built on a mathematical certainty: in the long run, the house always wins. It achieves this not through a single rigged game, but through the fundamental architecture of the casino itself.

The House Watches Every Card You Play. You are right: the "promise of no retention... is just a promise." Even if we accept the technical argument that the content of your documents isn't permanently stored to train their models, that's not the only way the house learns. It logs the metadata of your game: what questions you ask, what problems you solve, what features you use. It sees every "tell," every brilliant move your subject matter experts make. It is building a perfect, planetary-scale understanding of your strategy.

The House Uses Your Strategy to Create a New, Unbeatable Game. This is the "Great Commoditization." After observing thousands of players solve due diligence or tariff classification, the house identifies the winning patterns. It then builds a new, automated game—an AI agent—that perfectly replicates that expert strategy. It offers this new, cheaper, automated game to everyone, including your clients. The expertise you revealed at the table has been captured, productized, and used to make your own high-value service obsolete. The house used your own chips to fund the R&D for a game you can't possibly win.

When You Suspect a Cheat, the House Investigates Itself. This is the "Investigative Black Hole" we discussed. If you suspect your M&A strategy, which you analysed at their tables, has leaked to a competitor at the next table, who do you complain to? The house's security team. You are bound by the house's rules—the "contractual fortress"—which limits their liability and places the impossible burden of proof on you. You can never truly know what happens "behind the curtain."

Stop playing at the public casino where the odds are stacked against you.

At your table, you own the cards, the chips, and the room. The data never leaves your control.

Every hand played makes your "house" smarter. The expertise of your best players is captured in your own proprietary AI, creating a compounding asset.

You control the security cameras. The audit trail is yours alone. There is no "behind the curtain."

Let us help you build your own private table
